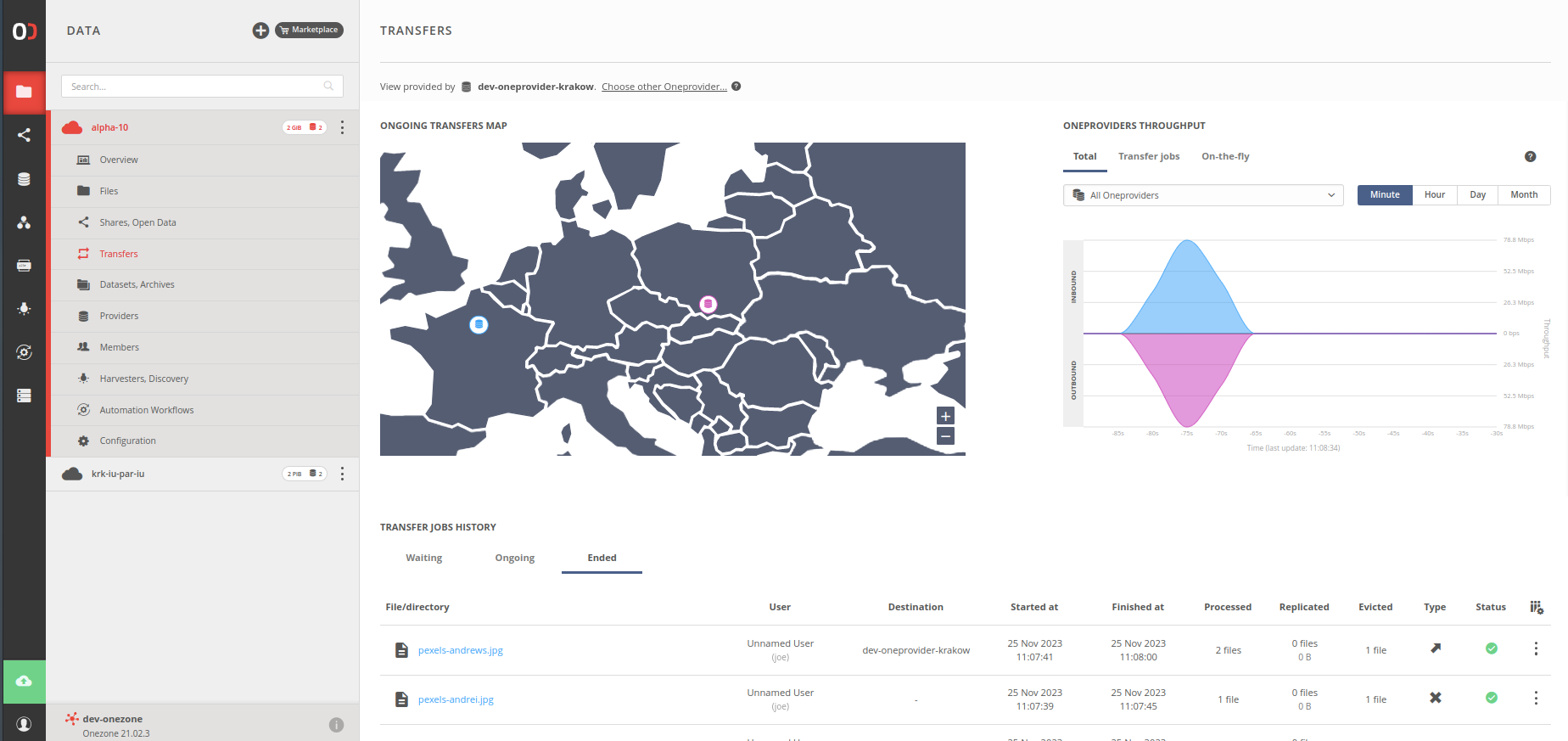

Data transfers history

Information about scheduled, ongoing, and historical data transfers is available in the dedicated transfers view. To navigate there, click on Transfers in the sidebar of the space alpha-11p.

Take some time to look around, examine the statistics, and view the details of individual transfers by clicking a transfer to expand it.